Researchers Hid Malware Inside an AI's 'Neurons' and It Worked Scarily Well

Neural networks could be the next frontier for malware campaigns as they become more widely used, according to a new study. The study, which was posted to the arXiv preprint server on Monday, found that malware can be embedded directly into the artificial neurons that make up machine-learning models in a way that keeps it from being detected. The infected neural network would even be able to keep performing its intended tasks normally.

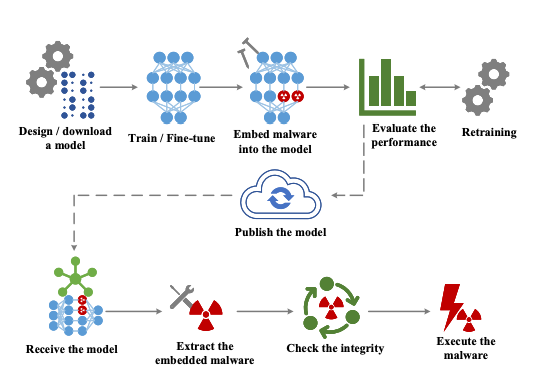

Overall workflow of how malware can be embedded into a model. arXiv

"As neural networks become more widely used, this method will be universal in delivering malware in the future," the authors, from the University of the Chinese Academy of Sciences, write. Using real malware samples, their experiments found that replacing up to roughly 50 percent of the neurons in the AlexNet model, a benchmark-setting classic in the AI field, with malware still kept the model's accuracy above 93.1 percent. The authors concluded that a 178MB AlexNet model can have up to 36.9MB of malware embedded into its structure, using a technique called steganography, without being detected. Some of the models were tested against 58 common antivirus systems, and the malware was not detected.
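
The article does not spell out how the bytes are encoded. As a rough illustration only, the Python sketch below assumes one simple steganographic scheme: each weight is stored as a little-endian IEEE 754 float32, and the three low-order bytes of each weight are overwritten with payload bytes while the high byte (the sign bit plus the top seven exponent bits) is left alone, so a typical weight's magnitude changes by less than a factor of four. The function names `embed` and `extract`, the synthetic weights, and the harmless demo payload are all hypothetical; this is not presented as the researchers' exact method.

```python
import struct

# Hypothetical sketch: hide bytes in float32 weights by overwriting the three
# low-order bytes of each value. Assumes little-endian IEEE 754 float32, so
# byte index 3 holds the sign bit and the top 7 exponent bits; it is kept as-is.


def embed(weights: list[float], payload: bytes) -> list[float]:
    """Return a copy of `weights` with `payload` written into their low 3 bytes."""
    if len(payload) > 3 * len(weights):
        raise ValueError("payload exceeds capacity of 3 bytes per weight")
    out = []
    for i, w in enumerate(weights):
        raw = bytearray(struct.pack("<f", w))  # round to float32, get its 4 bytes
        chunk = payload[3 * i : 3 * i + 3]  # empty once the payload is used up
        if chunk and (raw[3] & 0x7F) == 0x7F:
            # Top 7 exponent bits all set: changing the last exponent bit could
            # yield inf/NaN, so refuse instead of corrupting the weight.
            raise ValueError(f"weight {w!r} is too large to carry payload safely")
        raw[: len(chunk)] = chunk  # overwrite low-order bytes only
        out.append(struct.unpack("<f", bytes(raw))[0])
    return out


def extract(weights: list[float], length: int) -> bytes:
    """Read `length` payload bytes back from the low 3 bytes of each weight."""
    data = bytearray()
    for w in weights:
        if len(data) >= length:
            break
        data += struct.pack("<f", w)[:3]
    return bytes(data[:length])


# Synthetic weights in a typical small range (about 0.01 to 0.09, alternating sign).
weights = [0.0123 * (1 + i % 7) * (-1) ** i for i in range(64)]
payload = b"harmless demo payload bytes"  # stand-in for any byte string
stego = embed(weights, payload)
blob = b"".join(struct.pack("<f", w) for w in stego)  # serialized weights

print(extract(stego, len(payload)))  # b'harmless demo payload bytes'
print(blob.find(payload[:4]))  # -1: no 4-byte run of the payload is stored contiguously
```

Because every 4-byte window of the serialized weights contains one preserved high byte, at most three payload bytes ever sit side by side. That suggests one reason a scanner matching byte signatures against the raw model file might miss such a payload, though this toy does not reproduce the study's antivirus experiments. Under this scheme the ceiling is three of every four parameter bytes (75 percent of the weight data), while the study's reported 36.9MB in a 178MB model works out to about 21 percent of the file.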

Other methods of hacking into businesses or organizations, such as attaching malware to documents or files, often cannot deliver malicious software en masse without being detected. The new research, on the other hand, envisions a future where an organization may bring in an off-the-shelf machine-learning model for any given task (say, a chatbot or image detection) that could be loaded with malware while performing its task well enough not to arouse suspicion...

- Tags:

- AI-assisted attacks

- AlexNet model

- artificial intelligence (AI)

- artificial neurons

- arXiv preprint server

- Chaoge Liu

- cybersecurity

- deep neural network model

- delivering malicious software

- delivering malware

- Lukasz Olejnik

- machine-learning models

- malicious receiver program

- malware campaigns

- malware-loaded machine

- Motherboard

- neural network-assisted attacks

- neural networks

- poisoned machine learning model

- Radhamely De Leon

- steganography

- supply chain pollution

- University of the Chinese Academy of Sciences

- Xiang Cui

- Zhi Wang