Is Machine Learning for the Birds?

Cacophony Project uses the latest technology to monitor and protect endangered bird populations against predators.

The Cacophony Project's broad vision is to bring back New Zealand's native birds using the latest technology to monitor bird populations and humanely eliminate the introduced predators that are endangering them.

The project started in our founder's backyard to measure the effectiveness of his efforts to protect the birds on his property. From this simple beginning, the project has quickly grown into a system that includes two edge devices, a cloud server, and automatic identification of animals using machine learning. The project has been completely open source from the beginning and sees regular contributions from a wide variety of volunteers.

What makes New Zealand's birds so special?

In New Zealand, our birds are our taonga, our precious things. New Zealand was isolated from the rest of the world for 70 million years, and our bird species evolved free from the presence of mammals (aside from small bats). As a result, many of New Zealand's unique bird species, including our national icon, the kiwi, have no defense strategies against predators.

Unfortunately, invasive species such as rats, stoats, and possums, introduced after European settlement over the last couple of centuries, have decimated the populations of many bird species. Today, 80% of native bird species are endangered or in decline.

Over the years, conservationists have rescued many of our iconic species from the brink of extinction by relocating remaining populations to predator-free islands. These islands have been painstakingly cleared of all introduced mammals. On these islands, you can step back in time and hear the loud cacophony of birdsong that greeted the first European explorers.

These islands have been so successful that they are now full to the brim, and conservationists have started to create predator-free sanctuaries on the mainland. The government has recently set an ambitious target to make New Zealand free of rats, stoats, and possums by 2050.

Conservationists are not the only ones fighting for our birds. In recent years, many everyday New Zealanders have formed local groups to trap predators together, in the hope of hearing the melodic song of our native birds in their own backyards.

We believe we can make these groups far more effective by modernizing the tools they are using. By using digital lures combined with more effective elimination methods, we think these programs could be up to 80,000 times more effective.

Our technological ecosystem

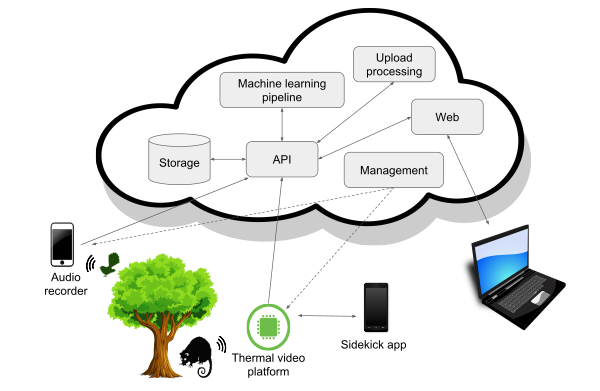

Over the past few years, using an open source, rapid prototyping approach, we have built up an ecosystem of components for automated monitoring of bird populations and predators introduced to New Zealand. The system is summarized in the diagram below.

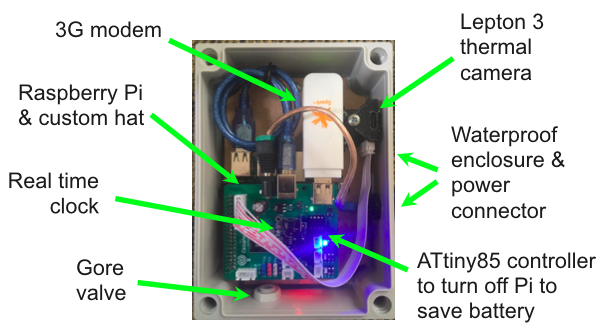

Our thermal video platform allows us to monitor and observe the behavior of pest species. It is specifically designed to detect small, fast-moving predators such as stoats and rats. It pairs a ruggedized Raspberry Pi computer with a FLIR Lepton 3 thermal camera. The device can run on batteries or AC power, and additional custom hardware provides power management, a watchdog, audio output to lure predators, and remote communication.

Our highly tuned motion detection algorithm watches the live thermal camera feed and detects warm, moving objects (hopefully animals). When movement is detected, the camera starts recording and uploads the recording to the Cacophony Project API server for storage and analysis. Because it works from the thermal feed, the camera can detect small mammals with much higher sensitivity than trail cameras, which are typically designed to detect larger mammals such as pigs and deer.
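The article does not describe the tuned detector itself, so what follows is only a minimal sketch of the general idea under stated assumptions. A pixel counts as "warm and moving" when it is warmer than a slowly updated background estimate and has also changed since the previous frame; recording would start once enough such pixels appear. The function names, the thresholds, and the running-average background are hypothetical choices for illustration, not the project's code.

```python
from typing import List

# A thermal frame as a 2-D grid of pixel readings (a Lepton 3 frame is
# 120 rows x 160 columns); plain lists keep the sketch dependency-free.
Frame = List[List[float]]


def update_background(background: Frame, frame: Frame, alpha: float = 0.05) -> Frame:
    """Blend the new frame into a slowly adapting background estimate
    (hypothetical running average)."""
    return [
        [(1 - alpha) * b + alpha * v for b, v in zip(bg_row, row)]
        for bg_row, row in zip(background, frame)
    ]


def detect_motion(prev: Frame, curr: Frame, background: Frame,
                  warm_delta: float = 2.0, move_delta: float = 1.0,
                  min_pixels: int = 3) -> bool:
    """Return True when enough pixels are both warmer than the background
    and changed since the previous frame (warm AND moving).

    All three thresholds are hypothetical values chosen for illustration.
    """
    hits = 0
    for r, row in enumerate(curr):
        for c, value in enumerate(row):
            warm = value - background[r][c] > warm_delta
            moved = abs(value - prev[r][c]) > move_delta
            if warm and moved:
                hits += 1
    return hits >= min_pixels


if __name__ == "__main__":
    background = [[10.0] * 4 for _ in range(4)]
    warm_blob = [row[:] for row in background]
    for r in (1, 2):
        for c in (1, 2):
            warm_blob[r][c] = 20.0  # a 2x2 warm patch appears
    print(detect_motion(background, warm_blob, background))   # True: 4 warm, moving pixels
    print(detect_motion(background, background, background))  # False: nothing changed
```

Requiring both conditions is what separates an animal from a sun-warmed rock: the rock is warm but does not move, so it never triggers a recording.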

High sensitivity is important because we can't manage what we can't detect. Mammals such as stoats are otherwise difficult to detect because they are small and fast and rarely interact with other detection devices such as tracking tunnels and chew cards.

We have also created an Android audio recorder that runs on inexpensive mobile phones. When installed in the field, it makes regular, objective, GPS-located, time-stamped audio recordings that are uploaded to our API server. These recordings are ideal for monitoring bird populations over time.

Eventually, we plan to use machine learning on these recordings to determine bird population trends for a given location and to automatically identify bird species where possible. While we continue to work on the AI, we are already recording at several locations around the country to build up year-on-year datasets.

Figure: Architecture showing the interactions between different components of the Cacophony ecosystem.

The captured audio and thermal video recordings are uploaded to our API server for storage and processing. Our machine learning pipeline processes the thermal video recordings and tags the animals that appear. All of the recordings are also converted to easily viewed formats.

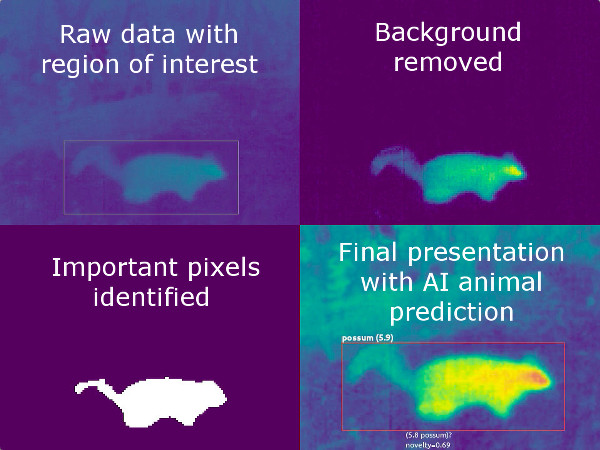

The machine learning pipeline enables automatic identification of predators and is one aspect that makes our approach especially interesting. Uploaded thermal video recordings are first analyzed to look for warm, moving objects in each frame. These areas are clipped from each frame and linked together through time to form what we call "tracks."
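As a rough illustration of how regions might be "linked together through time", here is a greedy nearest-neighbour tracker, assuming each frame has already been reduced to the centroids of its warm regions. The `max_jump` distance, the names `Track` and `link_tracks`, and the rule that a track ends as soon as it misses a frame are simplifying assumptions; the project's actual tracker is not described here.

```python
import math
from dataclasses import dataclass, field
from typing import List, Tuple

Point = Tuple[float, float]  # (x, y) centroid of a warm region


@dataclass
class Track:
    # (frame index, centroid) pairs in time order
    positions: List[Tuple[int, Point]] = field(default_factory=list)

    @property
    def last(self) -> Point:
        return self.positions[-1][1]


def link_tracks(frames: List[List[Point]], max_jump: float = 10.0) -> List[Track]:
    """Greedily attach each region to the nearest still-active track."""
    tracks: List[Track] = []
    active: List[Track] = []
    for i, centroids in enumerate(frames):
        unclaimed = list(active)  # each track may take at most one region per frame
        next_active: List[Track] = []
        for p in centroids:
            best = min(unclaimed, key=lambda t: math.dist(t.last, p), default=None)
            if best is not None and math.dist(best.last, p) <= max_jump:
                unclaimed.remove(best)
                best.positions.append((i, p))
                next_active.append(best)
            else:  # too far from every track: start a new one
                track = Track([(i, p)])
                tracks.append(track)
                next_active.append(track)
        active = next_active  # tracks with no match this frame end here
    return tracks


if __name__ == "__main__":
    frames = [[(0.0, 0.0), (50.0, 50.0)], [(2.0, 1.0), (52.0, 49.0)], [(4.0, 2.0)]]
    for t in link_tracks(frames):
        print(t.positions)
```

A production tracker would typically tolerate a few missed frames and use motion prediction, but the greedy version is enough to show how per-frame clips become tracks.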

Figure: Thermal video platform hardware.

These tracks are then classified using a convolutional recurrent neural network (CRNN) model implemented using the popular TensorFlow platform. The model is trained using human-tagged tracks broken into three-second chunks. The input tracks are stretched, flipped, and clipped to increase the variety of input data and make the model more robust in practical use.
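A minimal sketch of the data preparation described above, assuming a frame rate of about 9 frames per second (roughly what the Lepton 3 delivers) and interpreting "clipping" as cropping a border off each frame. Only flipping and cropping are shown; stretching is omitted for brevity. The helper names are hypothetical, and a real pipeline would operate on NumPy arrays or TensorFlow tensors rather than nested lists.

```python
import random
from typing import List

Frame = List[List[float]]  # one cropped thermal image from a track


def split_into_chunks(track: List[Frame], fps: int = 9, seconds: int = 3) -> List[List[Frame]]:
    """Cut a track into non-overlapping chunks of `seconds` length.
    A trailing partial chunk is dropped in this sketch."""
    size = fps * seconds
    return [track[i:i + size] for i in range(0, len(track) - size + 1, size)]


def flip_horizontal(chunk: List[Frame]) -> List[Frame]:
    """Mirror every frame left-to-right."""
    return [[row[::-1] for row in frame] for frame in chunk]


def crop_border(chunk: List[Frame], margin: int = 1) -> List[Frame]:
    """Clip `margin` pixels off every edge of every frame."""
    return [
        [row[margin:len(row) - margin] for row in frame[margin:len(frame) - margin]]
        for frame in chunk
    ]


def augment(chunk: List[Frame], rng: random.Random) -> List[Frame]:
    """Randomly flip, then crop by a random margin (hypothetical ranges)."""
    if rng.random() < 0.5:
        chunk = flip_horizontal(chunk)
    return crop_border(chunk, margin=rng.randint(0, 2))


if __name__ == "__main__":
    track = [[[float(c) for c in range(8)] for _ in range(8)] for _ in range(60)]
    chunks = split_into_chunks(track)  # 60 frames at 9 fps -> two 27-frame chunks
    training_examples = [augment(c, random.Random(42)) for c in chunks]
    print(len(training_examples), len(training_examples[0]))
```

Each pass over the data can draw fresh random flips and crops, so the model rarely sees exactly the same chunk twice.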

When classifying, the model is fed each frame of a track in turn and produces classification probabilities for each animal class at every point in time. Because the model is recurrent, its output for the current frame depends not only on that frame but also on the frames it has already seen. This allows the model to account for the way an animal moves over time, greatly increasing classification accuracy. This makes intuitive sense: human observers often find it difficult to identify an animal from a single video frame, but watching the animal move for a few seconds usually gives the species away.
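The toy recurrent cell below is not the project's CRNN; it only illustrates the property described above. A hidden state is carried from one frame to the next, so the class probabilities for a frame depend on the frames that came before it. The per-frame feature vectors, the weight matrices `W`, `U`, and `V`, and the function names are hypothetical, hand-picked, and untrained.

```python
import math
from typing import List, Sequence

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Sequence[float]) -> Vector:
    """Multiply matrix m by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def softmax(z: Sequence[float]) -> Vector:
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(z)  # subtract the max for numerical stability
    exps = [math.exp(v - peak) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def classify_track(frames: List[Vector], W: Matrix, U: Matrix, V: Matrix) -> List[Vector]:
    """Per-frame class probabilities from an Elman-style recurrent cell:
    h_t = tanh(W x_t + U h_{t-1}),  p_t = softmax(V h_t)."""
    hidden = [0.0] * len(U)
    outputs = []
    for x in frames:
        pre = [a + b for a, b in zip(matvec(W, x), matvec(U, hidden))]
        hidden = [math.tanh(p) for p in pre]  # memory of earlier frames
        outputs.append(softmax(matvec(V, hidden)))
    return outputs


if __name__ == "__main__":
    W = [[1.0, 0.0], [0.0, 1.0]]
    U = [[0.5, 0.0], [0.0, 0.5]]
    V = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]  # 3 hypothetical classes
    a = classify_track([[1.0, 0.0], [0.0, 1.0]], W, U, V)
    b = classify_track([[0.0, 1.0], [0.0, 1.0]], W, U, V)
    print(a[-1])
    print(b[-1])
```

Both example tracks end on the same frame but differ earlier, and their final probabilities differ, which is exactly the behavior a single-frame classifier cannot have.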

Figure: The steps to identify animals in a thermal image. The clipped image is fed into our machine learning algorithm, which computes a prediction for the animal species.

We created a web platform so users can view and listen to recordings made on their devices. Through this platform, users can also manually tag animals in videos and set up audio playback to lure animals closer to the camera.

Our system also includes device management tools. Our management server allows us to monitor the uptime of online devices and push new software versions and configuration to our devices.

Finally, we have Sidekick, an Android app that helps users set up the thermal cameras. For devices without mobile data coverage, Sidekick can wirelessly collect thermal recordings and upload them later when internet connectivity is available.
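Sidekick's internals are not described in the article; the sketch below shows only the general store-and-forward pattern it implies, written in Python for consistency with the other examples rather than in an Android language. The class name `StoreAndForward` and the `upload` callback are hypothetical. Recordings are queued as they are collected and sent in order once connectivity returns; a failed upload stops the flush so that nothing is lost.

```python
from collections import deque
from typing import Callable, Deque


class StoreAndForward:
    """Hypothetical queue of collected recordings awaiting upload."""

    def __init__(self, upload: Callable[[str], bool]):
        self.pending: Deque[str] = deque()
        self.upload = upload  # returns True when the server accepted the recording

    def collect(self, recording_id: str) -> None:
        """Store a recording pulled from a camera while offline."""
        self.pending.append(recording_id)

    def flush(self, online: bool) -> int:
        """Upload queued recordings in order; return how many were sent."""
        if not online:
            return 0
        sent = 0
        while self.pending:
            if not self.upload(self.pending[0]):
                break  # keep it queued and retry on the next flush
            self.pending.popleft()
            sent += 1
        return sent


if __name__ == "__main__":
    uploaded = []
    queue = StoreAndForward(lambda rid: uploaded.append(rid) or True)
    queue.collect("rec-001")
    queue.collect("rec-002")
    print(queue.flush(online=False), queue.flush(online=True), uploaded)
```

Removing an item only after a successful upload means a dropped connection mid-flush leaves the remaining recordings safely queued.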

Our next moves

With a small team and minimal management, we move quickly to iterate, discover, and improve. There are so many good ideas floating around that one of the hardest things is for us to decide what to work on next. We always have to balance trying out new ideas with making our technology more reliable and easier for people to use.

Currently, we are working on adapting our artificial intelligence (AI) model to run on the thermal camera hardware to allow autonomous classification of predators. Once this is done, we plan to pair the camera with traps that trigger only when the camera identifies a target species. This allows for more effective trapping strategies that increase predator catch rates while eliminating by-catch of non-target animals.
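One way such a trap trigger could avoid by-catch is to require a confident, sustained identification of a target species before firing. The sketch below assumes per-frame class probabilities like those the model produces; the class labels, the 0.9 threshold, the three-consecutive-frame rule, and the function name `should_trigger` are hypothetical parameters, not the project's design.

```python
from typing import Dict, Iterable, Sequence


def should_trigger(frame_probs: Sequence[Dict[str, float]], targets: Iterable[str],
                   threshold: float = 0.9, min_frames: int = 3) -> bool:
    """Fire only if a target species is the top class with probability
    >= threshold for at least `min_frames` consecutive frames."""
    target_set = set(targets)
    streak = 0
    for probs in frame_probs:
        label = max(probs, key=probs.get)  # most likely class for this frame
        if label in target_set and probs[label] >= threshold:
            streak += 1
            if streak >= min_frames:
                return True
        else:
            streak = 0  # any doubtful frame resets the count
    return False


if __name__ == "__main__":
    stoat = {"stoat": 0.95, "bird": 0.05}
    bird = {"bird": 0.97, "stoat": 0.03}
    print(should_trigger([stoat] * 3, targets={"stoat"}))  # True
    print(should_trigger([bird] * 5, targets={"stoat"}))   # False
```

Raising `threshold` or `min_frames` trades a few missed predators for a lower risk of harming a non-target animal.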

Other upcoming projects include visualizations of our ever-growing set of recordings, applying machine learning to evaluate birdsong in audio recordings, and new device management features. We also have many other ideas to try out, both big and small. The team is never bored!

If you are keen to learn more, visit the Cacophony Project website. If you'd like to contribute, please use the contact form or email addresses listed there. With the project moving so fast, contacting us directly is usually the easiest way to identify the most interesting and useful ways for you to help.

Is Machine Learning for the Birds? was authored by Clare McLennan and Menno Finlay-Smits and published on Opensource.com. It is republished by Open Health News under the terms of the Creative Commons Attribution-ShareAlike 4.0 International License (CC BY-SA 4.0). The original copy of the article can be found on Opensource.com.
